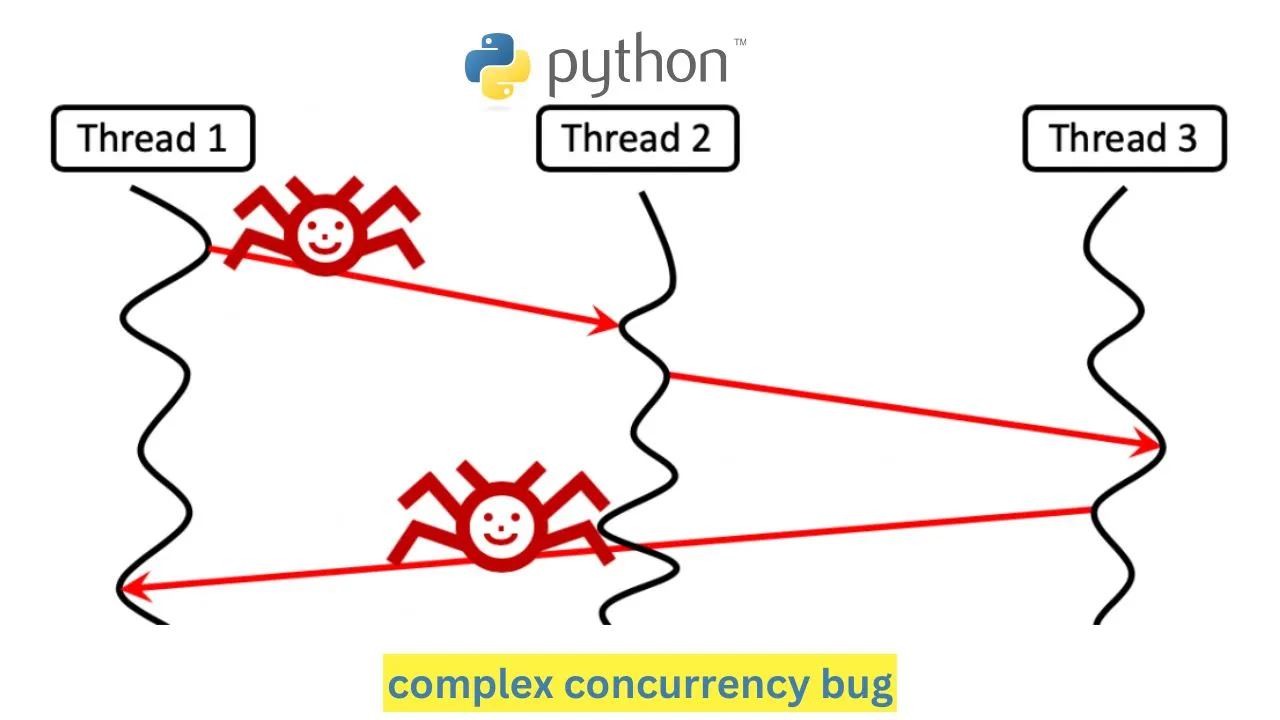

One complex bug I encountered involved a concurrency issue in a multithreaded Python application. The application was designed to process large datasets by spawning multiple threads to handle data parsing and computation. However, it intermittently failed with inconsistent outputs and occasional crashes, making the bug difficult to identify and reproduce.

Symptoms of the Bug

The symptoms were:

- Intermittent data corruption where processed data had missing or incorrect values.

- Occasional crashes with segmentation faults, especially under higher workloads.

- Race conditions that caused data to be overwritten by other threads, leading to incorrect results.

Initial Debugging Steps

- Reproduce the Issue: I began by creating a controlled test environment to consistently reproduce the issue. By increasing the workload and running multiple instances of the application, I could trigger the bug more frequently.

- Code Review and Logging: I reviewed the code, focusing on shared resources and critical sections. To identify where the issue was occurring, I added extensive logging to track thread operations and capture data processing at each stage.
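A minimal sketch of the reproduce-and-log approach, assuming a simple shared counter stands in for the real shared data. The names run_stress, increment, and worker are hypothetical, and the time.sleep(0) between the read and the write is an assumption added only to widen the race window so lost updates show up quickly. Every log line carries the thread name, which is what makes interleavings visible.

```python
import logging
import threading
import time

# Include the thread name in every log line so interleavings are visible
logging.basicConfig(level=logging.INFO, format="%(asctime)s %(threadName)s %(message)s")
log = logging.getLogger("stress")


def run_stress(n_threads=8, n_iters=1000, use_lock=False):
    """Hypothetical harness: hammer a shared counter from many threads."""
    state = {"count": 0}
    lock = threading.Lock()

    def increment():
        # Read-modify-write: not atomic when done without the lock
        value = state["count"]
        time.sleep(0)  # Yield to other threads to widen the race window
        state["count"] = value + 1

    def worker():
        for _ in range(n_iters):
            if use_lock:
                with lock:
                    increment()
            else:
                increment()
        log.info("finished %d iterations", n_iters)

    threads = [threading.Thread(target=worker, name=f"worker-{i}") for i in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    expected = n_threads * n_iters
    log.info("use_lock=%s count=%d expected=%d", use_lock, state["count"], expected)
    return state["count"], expected


if __name__ == "__main__":
    run_stress(use_lock=False)  # Usually reports fewer increments than expected
    run_stress(use_lock=True)   # Always reports the expected count
```

Raising n_threads and n_iters plays the same role as increasing the workload in the real application: more contention makes the intermittent failure show up more often.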

Root Cause Analysis

Through this analysis, I identified three main factors:

- Shared Data Structure without Locking: The threads were accessing a shared dictionary to store results without proper synchronization. This led to race conditions where one thread would overwrite or read data that another thread was modifying.

- Inconsistent State due to Lack of Thread Safety: Some variables used in data processing were thread-unsafe, and their values were occasionally being corrupted when accessed concurrently.

- Deadlocks and Performance Bottlenecks: While debugging with logging, I observed deadlocks when multiple threads tried to acquire the same lock in different orders.
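The opposite-order locking pattern behind those deadlocks can be reproduced deterministically. The sketch below is a hypothetical reconstruction, not the application's code: lock_a, lock_b, and the thread names are made up, a threading.Barrier forces each thread to hold its first lock before requesting the second, and acquire(timeout=...) is used so the program reports the deadlock instead of hanging.

```python
import threading

lock_a = threading.Lock()
lock_b = threading.Lock()
barrier = threading.Barrier(2)  # Reused to line up both threads at two points
results = {}  # Each thread writes only its own key


def worker(name, first, second):
    with first:
        barrier.wait()  # Both threads now hold their first lock
        # Requesting the other thread's lock: without a timeout this would block forever
        got_second = second.acquire(timeout=0.5)
        if got_second:
            second.release()
        results[name] = got_second
        barrier.wait()  # Keep holding `first` until both attempts have finished


# Thread A locks a then b; thread B locks b then a (opposite order)
t1 = threading.Thread(target=worker, args=("A", lock_a, lock_b))
t2 = threading.Thread(target=worker, args=("B", lock_b, lock_a))
t1.start()
t2.start()
t1.join()
t2.join()

for name, ok in sorted(results.items()):
    print(f"Thread {name} got its second lock: {ok}")
```

Both threads report False: each is waiting for a lock the other holds, which is exactly the circular wait that produced the hangs.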

Code Examples

Below are code examples based on the debugging process described above. This includes handling shared data with locks, using thread-safe queues, and converting parts of the application to asynchronous handling with asyncio to avoid race conditions and improve concurrency management.

1. Using Locks for Shared Data in Multithreading

To manage shared data safely, we can use a threading.Lock to ensure only one thread modifies the data at a time.

import threading

# Shared data dictionary
shared_data = {}
data_lock = threading.Lock()

def thread_safe_update(key, value):
    # Lock the shared resource during modification
    with data_lock:
        shared_data[key] = value  # Only one thread can modify this at a time

def worker_thread(worker_id):
    for i in range(5):
        thread_safe_update(f"thread-{worker_id}-{i}", f"value-{i}")
        print(f"Thread {worker_id} updated key thread-{worker_id}-{i}")

# Create and start multiple threads
threads = [threading.Thread(target=worker_thread, args=(i,)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print("Final shared data:", shared_data)

In this code, each thread safely updates shared_data by locking access to it using data_lock. This prevents race conditions.
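Locking is not the only option. A different technique, not the one used in the fix described here, is to avoid the shared dictionary altogether: each worker builds and returns its own results, and only the main thread merges them. A minimal sketch using concurrent.futures.ThreadPoolExecutor; the worker and run functions are hypothetical and only mirror worker_thread above.

```python
from concurrent.futures import ThreadPoolExecutor


def worker(worker_id):
    # Build results in a local dict: no other thread can see it, so no lock is needed
    local = {}
    for i in range(5):
        local[f"thread-{worker_id}-{i}"] = f"value-{i}"
    return local


def run(n_workers=3):
    merged = {}
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # map() yields results in input order; merging happens in this thread only
        for partial in pool.map(worker, range(n_workers)):
            merged.update(partial)
    return merged


if __name__ == "__main__":
    final = run()
    print(len(final))  # 15 entries: 3 workers x 5 keys each
```

The trade-off is memory: each worker holds its partial results until the merge, whereas the locked version writes straight into one dictionary.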

2. Using a Thread-Safe Queue

To reduce the need for locks, you can use queue.Queue, which is thread-safe by design. Each thread can place items into the queue, and a single consumer thread can handle processing.

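import threading
import queue

# Queue for thread-safe data handling
data_queue = queue.Queue()
SENTINEL = None  # Signals the consumer that no more items will arrive

def producer_thread(producer_id):
    for i in range(5):
        item = f"data-from-thread-{producer_id}-{i}"
        data_queue.put(item)
        print(f"Producer {producer_id} added: {item}")

def consumer_thread():
    while True:
        item = data_queue.get()  # Blocks until an item is available
        if item is SENTINEL:
            data_queue.task_done()
            break
        print(f"Consumer processed: {item}")
        data_queue.task_done()

# Start the consumer first so it processes items while the producers run
consumer = threading.Thread(target=consumer_thread)
consumer.start()

# Create and start producer threads
producers = [threading.Thread(target=producer_thread, args=(i,)) for i in range(3)]
for p in producers:
    p.start()
for p in producers:
    p.join()

# All producers are done: tell the consumer to stop, then wait for it
data_queue.put(SENTINEL)
consumer.join()

Here, producer threads add items to data_queue while a single consumer thread takes them off and processes them. No thread touches a shared container directly; queue.Queue handles the locking internally. The consumer blocks on get() and stops when it receives the sentinel, rather than polling data_queue.empty(): empty() is only a snapshot, so a consumer running alongside the producers could see a momentarily empty queue and exit before all items arrive.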
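In the fix described later, a single worker thread consumed the queue and stored the results. A minimal sketch of that arrangement, using task_done() together with queue.Queue.join() so the main thread can wait until every item has been handled. The store_results and produce functions, the results dictionary, and the upper() "parsing" step are hypothetical stand-ins.

```python
import queue
import threading

work_queue = queue.Queue()
results = {}  # Written only by the consumer thread, so it needs no lock


def store_results():
    # Daemon consumer: runs for the life of the program, one item at a time
    while True:
        key, raw = work_queue.get()
        try:
            results[key] = raw.upper()  # Stand-in for the real parsing step
        finally:
            work_queue.task_done()  # Always mark the item done, even on error


def produce(producer_id, n_items=5):
    for i in range(n_items):
        work_queue.put((f"{producer_id}-{i}", f"record {i}"))


consumer = threading.Thread(target=store_results, daemon=True)
consumer.start()

producers = [threading.Thread(target=produce, args=(p,)) for p in range(3)]
for p in producers:
    p.start()
for p in producers:
    p.join()

work_queue.join()  # Returns once task_done() has been called for every item put
print(len(results))  # 15
```

Because only one thread ever writes to results, the dictionary stays consistent without a lock; the queue is the only synchronization point.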

3. Using asyncio for Asynchronous Handling

For I/O-bound parts of the application, converting to asynchronous handling can improve efficiency and simplify concurrency by reducing the need for explicit threads.

import asyncio

# Simulated asynchronous tasks for concurrent processing
async def async_task(task_id):
    print(f"Starting async task {task_id}")
    await asyncio.sleep(1)  # Simulating I/O or a long-running wait
    print(f"Completed async task {task_id}")

async def main():
    tasks = [async_task(i) for i in range(5)]
    await asyncio.gather(*tasks)

# Run the asynchronous tasks
asyncio.run(main())

In this code, asyncio runs five tasks concurrently on a single thread. Whenever a task reaches await asyncio.sleep(1), control returns to the event loop, which starts or resumes another task, so all five finish in about one second rather than five. These tasks need no locks because they share no state, and because the event loop switches tasks only at await points, code between two awaits cannot be interrupted by another task. State that is read before an await and written after it, however, still needs an asyncio.Lock.
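A minimal sketch of the case where asyncio still needs a lock, assuming a hypothetical shared counter that each task reads, then awaits, then writes. Without the lock, every task can read the same old value before any of them writes it back; asyncio.Lock makes the read-await-write sequence exclusive.

```python
import asyncio


async def read_modify_write(state):
    value = state["count"]
    await asyncio.sleep(0)  # Suspension point: other tasks may run here
    state["count"] = value + 1


async def bump(state, lock=None):
    if lock is None:
        await read_modify_write(state)
    else:
        async with lock:  # Only one task at a time runs the read-await-write sequence
            await read_modify_write(state)


async def run(n_tasks=100, use_lock=False):
    state = {"count": 0}
    lock = asyncio.Lock() if use_lock else None
    await asyncio.gather(*(bump(state, lock) for _ in range(n_tasks)))
    return state["count"]


if __name__ == "__main__":
    print(asyncio.run(run(use_lock=False)))  # Typically far fewer than 100
    print(asyncio.run(run(use_lock=True)))   # 100
```

This is the single-threaded version of the same lost-update race: asyncio removes preemption, not interleaving at await points.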

4. Detecting Deadlocks and Using RLock for Reentrant Locking

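When a thread may need to acquire the same lock more than once, for example when a function that holds the lock calls another function that also takes it, use threading.RLock (reentrant lock). With a plain threading.Lock, the second acquire by the same thread would block forever, deadlocking the thread on itself.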

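import threading

lock = threading.RLock()

def nested_locking(thread_id):
    with lock:
        print(f"Thread {thread_id} acquired lock, entering nested lock")
        with lock:  # Reentrant lock lets the same thread acquire it again
            print(f"Thread {thread_id} acquired lock again in nested section")

threads = [threading.Thread(target=nested_locking, args=(i,)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

Using RLock here lets a thread re-acquire a lock it already holds, which prevents a self-deadlock when the thread calls a function that also requires the lock. Other threads still wait until the owning thread has released the lock as many times as it acquired it. Note that RLock does not help with the deadlocks described earlier, where threads acquire different locks in different orders; those are avoided by always acquiring locks in the same order.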
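For the lock-ordering deadlocks, a common remedy is to impose one global acquisition order. A minimal sketch, assuming two hypothetical Account objects that each carry their own lock and a transfer function that needs both: sorting the locks by a fixed key (here a numeric account_id, also an assumption) means every thread acquires them in the same order, so two opposite transfers can never each hold one lock while waiting for the other.

```python
import threading


class Account:
    def __init__(self, account_id, balance):
        self.account_id = account_id  # Also used as the global lock order
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src, dst, amount):
    # Always lock the account with the smaller id first, whatever the direction
    first, second = sorted((src, dst), key=lambda acct: acct.account_id)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount


def many_transfers(src, dst, n=1000):
    for _ in range(n):
        transfer(src, dst, 1)


a = Account(1, 1000)
b = Account(2, 1000)

# Opposite directions at the same time: safe because the lock order is fixed
t1 = threading.Thread(target=many_transfers, args=(a, b))
t2 = threading.Thread(target=many_transfers, args=(b, a))
t1.start()
t2.start()
t1.join()
t2.join()
print(a.balance, b.balance)  # 1000 1000
```

Any fixed, total order works; what matters is that every code path that takes more than one of these locks follows it.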

By using these techniques and code patterns, you can manage concurrency, avoid race conditions, and reduce the risk of deadlocks in Python applications.

Tools and Techniques Used for Debugging

- Threading and Concurrency Analysis: I used Python’s threading library in conjunction with the logging module to trace thread activity. This helped identify areas where multiple threads accessed shared resources.

- Memory Profiler and CPU Profiler: I used memory and CPU profiling tools (like memory_profiler and cProfile) to identify performance bottlenecks and ensure memory was managed correctly. These tools showed high memory usage due to unclosed resources and memory being held in deadlock situations.

- GDB with Python Extensions: Since segmentation faults were involved, I used GDB (GNU Debugger) to analyze core dumps. GDB’s Python extensions allowed me to inspect Python objects within the C stack, which helped locate where segmentation faults occurred in relation to specific data structures.
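A minimal sketch of the cProfile side of that workflow, using only the standard library's cProfile and pstats modules; parse_records and profile_call are hypothetical stand-ins for the real parsing code and harness. memory_profiler is a third-party package and is not shown here.

```python
import cProfile
import io
import pstats


def parse_records(n):
    # Hypothetical stand-in for the real data-parsing step
    return [str(i).zfill(8) for i in range(n)]


def profile_call(func, *args):
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()

    # Render the top five entries, sorted by cumulative time, into a string
    stream = io.StringIO()
    stats = pstats.Stats(profiler, stream=stream)
    stats.sort_stats("cumulative").print_stats(5)
    return result, stream.getvalue()


if __name__ == "__main__":
    records, report = profile_call(parse_records, 100_000)
    print(report)
```

Sorting by cumulative time puts the functions whose call trees are most expensive at the top, which is usually the quickest way to spot a bottleneck.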

Solution and Fixes

- Locking Mechanisms: I implemented locks (threading.Lock) around critical sections where shared resources were accessed. This ensured that only one thread could modify the shared dictionary at a time, preventing race conditions. Additionally, I used threading.RLock for reentrant locking, as some functions needed to acquire the lock multiple times.

- Thread-safe Queues: I replaced direct shared data access with a queue.Queue, which is thread-safe and manages locks internally. Instead of threads writing to the shared dictionary, they would put processed data into the queue, which a single worker thread would then consume and store.

- Asynchronous Handling and Task Segmentation: I modified parts of the code to use asynchronous operations (via asyncio) where feasible, enabling more efficient task handling and reducing reliance on multithreading for tasks that did not need it.
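A minimal sketch of how "functions that need to acquire the lock multiple times" typically arises, assuming a hypothetical ResultStore class whose public methods all take the same lock, where add_many calls add while already holding it. With a plain threading.Lock, add_many would block forever on its first call to add.

```python
import threading


class ResultStore:
    """Hypothetical thread-safe store: every public method takes the same lock."""

    def __init__(self):
        self._lock = threading.RLock()  # A plain Lock would self-deadlock in add_many
        self._data = {}

    def add(self, key, value):
        with self._lock:
            self._data[key] = value

    def add_many(self, items):
        # Holds the lock for the whole batch, then re-enters it inside add()
        with self._lock:
            for key, value in items:
                self.add(key, value)

    def snapshot(self):
        with self._lock:
            return dict(self._data)


def batch_for(worker_id):
    return [(f"{worker_id}-{i}", i) for i in range(5)]


store = ResultStore()
workers = [threading.Thread(target=store.add_many, args=(batch_for(w),)) for w in range(3)]
for t in workers:
    t.start()
for t in workers:
    t.join()
print(len(store.snapshot()))  # 15
```

An alternative many codebases prefer is a private, unlocked helper called from both public methods, which keeps a plain Lock; switching to RLock is the smaller change.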

Outcome

After implementing these changes, the application’s concurrency issues were resolved. The data corruption stopped, and segmentation faults no longer occurred. Using thread-safe data structures and improving lock management allowed the application to run efficiently even under heavy loads.

Lessons Learned

This experience reinforced the importance of:

- Using thread-safe data structures in concurrent applications.

- Limiting direct shared data access among threads.

- Profiling memory and CPU usage to detect bottlenecks early.

- Leveraging debugging tools like GDB for segmentation faults, especially in Python applications using C extensions or native libraries.

By applying these techniques, I was able to isolate the bug and ensure the application operated reliably under concurrent workloads.